At a Glance

3D cameras based on time-of-flight (ToF) PMD image sensors (Photonic Mixing Device) are a cost-effective technology for active, pixel-resolved distance measurement and already a key technology for assistance systems in mobile robotics. PMD cameras can effectively suppress the disturbing but constant component of extraneous light, such as sunlight. However, distance measurements are often not accurate enough, e.g. due to the reflectivity of the obstacle, the interference of multiple optical light paths (multipath interference) and mixed pixels.
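As background for the PMD principle above: a PMD pixel does not time a light pulse directly but measures the phase shift of amplitude-modulated light through correlation samples. The sketch below assumes the common four-phase continuous-wave scheme with an idealized sinusoidal correlation; the function names, the sample model and the 20 MHz modulation frequency are illustrative assumptions, not parameters of the sensors used in the project. It also shows why a constant extraneous-light offset drops out: the phase is computed only from differences of opposite samples.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def simulate_samples(distance_m, f_mod_hz, amplitude=1.0, background=0.0):
    """Idealized (hypothetical) correlation samples a_k = B + A*cos(phi - k*pi/2), k = 0..3.

    `background` models a constant extraneous-light offset such as sunlight.
    """
    phi = 4 * math.pi * f_mod_hz * distance_m / C  # phase shift of the round trip
    return [background + amplitude * math.cos(phi - k * math.pi / 2) for k in range(4)]


def tof_distance(a0, a90, a180, a270, f_mod_hz):
    """Distance and amplitude from four samples taken at 0, 90, 180 and 270 degrees."""
    # Differences of opposite samples cancel any constant offset (e.g. sunlight).
    i = a0 - a180
    q = a90 - a270
    phi = math.atan2(q, i) % (2 * math.pi)  # wrap phase to [0, 2*pi)
    amplitude = math.hypot(i, q) / 2
    distance = C * phi / (4 * math.pi * f_mod_hz)
    return distance, amplitude


if __name__ == "__main__":
    f_mod = 20e6  # hypothetical 20 MHz modulation frequency
    print("unambiguous range [m]:", C / (2 * f_mod))
    print(tof_distance(*simulate_samples(3.0, f_mod), f_mod))
    print(tof_distance(*simulate_samples(3.0, f_mod, background=50.0), f_mod))
```

Because the phase wraps at 2π, distances beyond c/(2·f_mod) (about 7.5 m at 20 MHz) alias. Multipath interference and mixed pixels are not constant offsets: they superimpose returns with different phases, so they do not cancel in the differences and instead bias the estimated distance.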

In the research project 3DRobust (Robust 3D Stereo Camera based on Time-of-Flight Sensors for the Improvement of Environment and Object Recognition in Autonomous Mobile Robots), a new type of 3D camera is being developed that carries two PMD sensors and RGB sensors at board level and calculates the distance data redundantly according to the "fail-operational" principle. The reliability of the distance data is increased by fusing two depth images generated in different ways with RGB images. The data fusion is to be performed with convolutional neural networks (CNNs), which are particularly well suited to image processing. The camera prototype is to be tested in the application "autonomous control of a mobile robot". In particular, the aim is to reduce the number of false alarms in obstacle detection and of incorrect object classifications.
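The network architecture is not described here. As a purely illustrative sketch, assuming early fusion (stacking all registered inputs as channels), the following pure-Python code shows how a single convolution layer mixes ToF depth, a second depth image and RGB at every pixel. The function names are hypothetical and the filter weights are set by hand; a real CNN would learn many such filters from data, typically with a deep-learning framework.

```python
def stack_inputs(tof_depth, stereo_depth, rgb):
    """Early fusion: stack ToF depth, stereo depth and RGB into 5 channels.

    tof_depth and stereo_depth are H x W lists, rgb is an H x W x 3 list.
    Returns a C x H x W nested list with C = 5.
    """
    h, w = len(tof_depth), len(tof_depth[0])
    assert len(stereo_depth) == h and len(rgb) == h, "inputs must be registered"
    channels = [tof_depth, stereo_depth]
    for c in range(3):
        channels.append([[rgb[y][x][c] for x in range(w)] for y in range(h)])
    return channels


def conv3x3(x, weights, bias=0.0):
    """One output channel of a 3x3 convolution with zero padding.

    weights has shape C x 3 x 3. As in deep-learning frameworks, this is a
    cross-correlation: each output pixel mixes all input channels in a 3x3
    neighbourhood, which is how a CNN layer combines the different sensors.
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    out = []
    for y in range(h):
        row = []
        for xc in range(w):
            acc = bias
            for c in range(c_in):
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, xc + dx
                        if 0 <= yy < h and 0 <= xx < w:  # zero padding outside
                            acc += weights[c][dy + 1][dx + 1] * x[c][yy][xx]
            row.append(acc)
        out.append(row)
    return out


def relu(m):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in m]


if __name__ == "__main__":
    tof = [[1.0, 2.0], [3.0, 4.0]]
    stereo = [[3.0, 2.0], [1.0, 0.0]]
    rgb = [[[0.2, 0.4, 0.6], [0.1, 0.1, 0.1]], [[0.9, 0.8, 0.7], [0.0, 0.5, 1.0]]]
    x = stack_inputs(tof, stereo, rgb)
    # Hand-set (not learned) filter: average the two depth channels, ignore RGB.
    weights = [[[0.0] * 3 for _ in range(3)] for _ in range(5)]
    weights[0][1][1] = 0.5
    weights[1][1][1] = 0.5
    print(relu(conv3x3(x, weights)))
```

A trained layer would not simply average: it can learn, for example, to trust one depth source more where the RGB channels show edges or low texture, which is the kind of context-dependent weighting a fixed rule cannot express.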

RWU3D Dataset

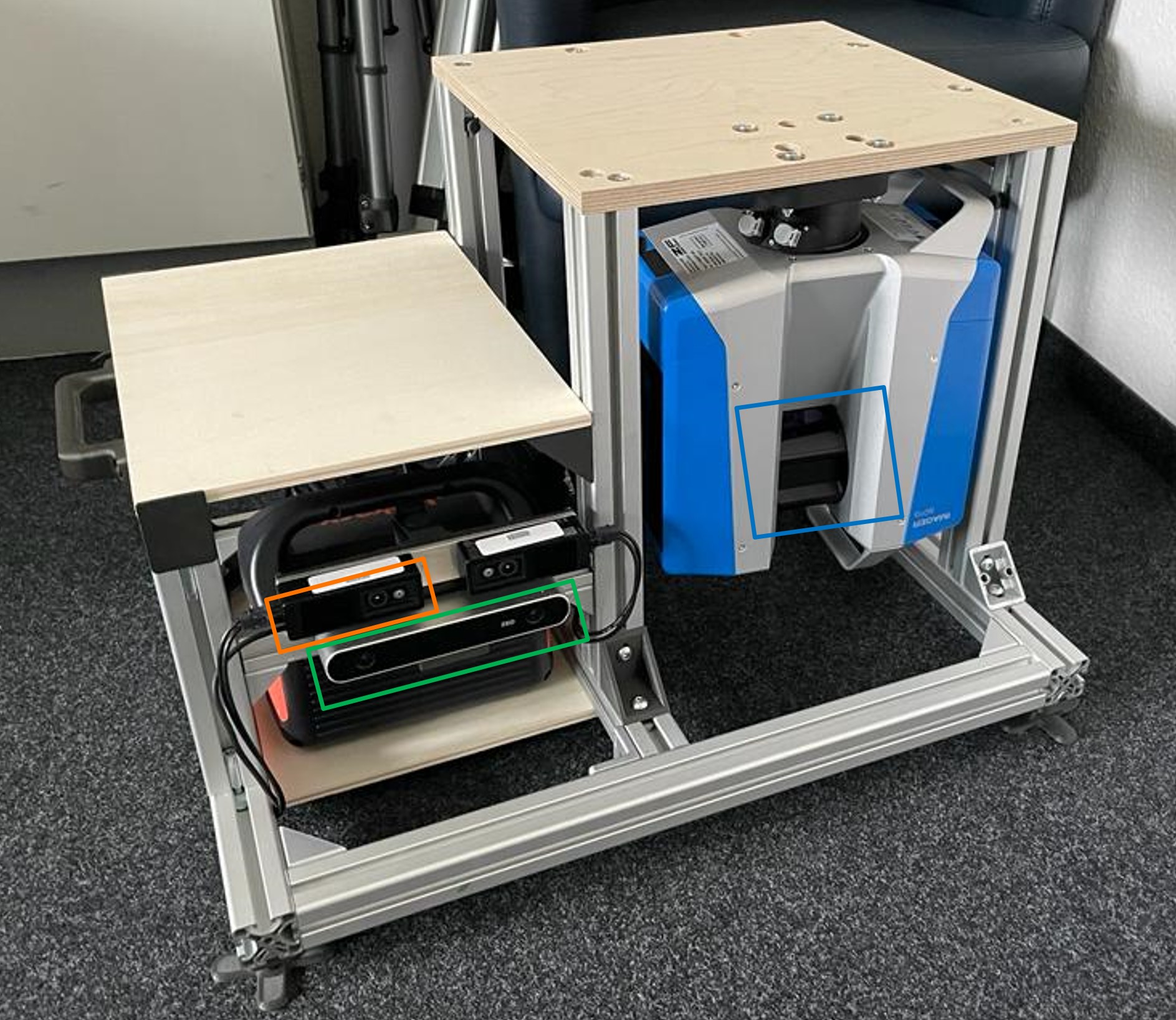

As part of the project, we created a real-world dataset called RWU3D, which comprises interpolated ToF data and RGB data together with high-resolution ground truth data. The fully mounted rig used to capture the dataset, with the Z+F 3D laser scanner (marked in blue), the O3R ToF camera (marked in orange) and the ZED 2 stereo camera (marked in green), is shown below. The image on the right shows the rig in its final position while capturing a scene.

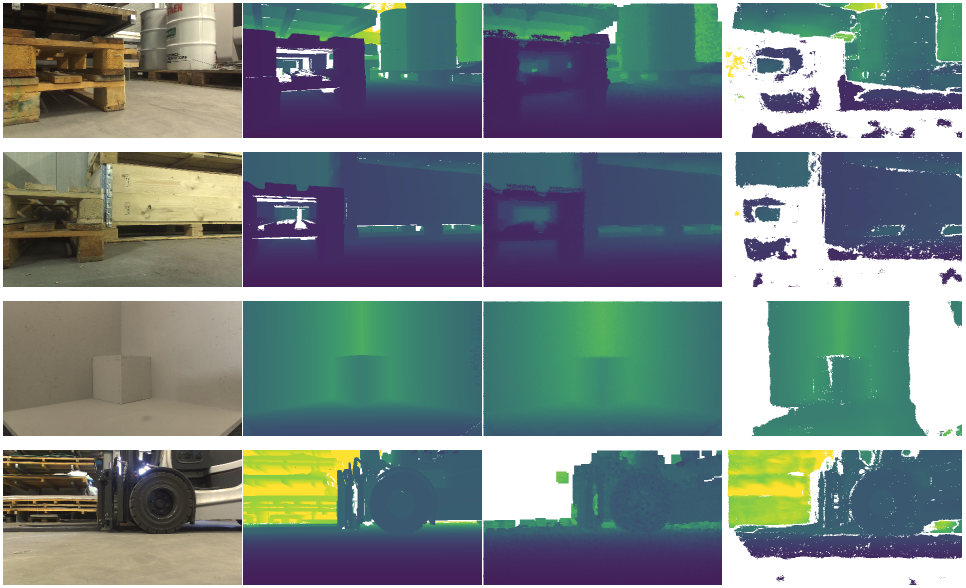

The dataset has 44 different scans/viewpoints with a total of 770 frames. Examples of recorded frames from four different scenes are shown below. The columns show, from left to right, the left RGB image, the Ground Truth depth, the ToF depth and the stereo depth.

The following data is published for use:

- ToF amplitude

- ToF depth & disparity

- RGB left & right

- Stereo depth & disparity

- Ground Truth depth & disparity
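Depth and disparity are provided for each source. For a rectified stereo pair the two are related by d = f·B/Z, with disparity d and focal length f in pixels, baseline B and depth Z in metres. The following sketch assumes that relation; the function names as well as the focal length and baseline values are hypothetical placeholders, and the actual calibration should be taken from the dataset or the camera.

```python
def depth_to_disparity(depth_m, focal_px, baseline_m):
    """Convert a depth map (metres) to disparity (pixels): d = f * B / Z.

    Pixels with Z <= 0 are treated as invalid and mapped to 0.0.
    """
    fb = focal_px * baseline_m
    return [[fb / z if z > 0 else 0.0 for z in row] for row in depth_m]


def disparity_to_depth(disp_px, focal_px, baseline_m):
    """Inverse conversion: Z = f * B / d; d <= 0 is treated as invalid (0.0)."""
    fb = focal_px * baseline_m
    return [[fb / d if d > 0 else 0.0 for d in row] for row in disp_px]


if __name__ == "__main__":
    FOCAL_PX = 700.0   # hypothetical focal length in pixels
    BASELINE_M = 0.12  # hypothetical stereo baseline in metres
    depth = [[2.0, 4.0], [0.0, 8.0]]  # 0.0 marks a missing measurement
    disp = depth_to_disparity(depth, FOCAL_PX, BASELINE_M)
    print(disp)
    print(disparity_to_depth(disp, FOCAL_PX, BASELINE_M))
```

Since d is inversely proportional to Z, a fixed disparity error Δd corresponds to a depth error of roughly Z²·Δd/(f·B), which grows quadratically with distance; this is one reason stereo depth degrades at long range.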

The dataset is available in three resolutions (full, half and quarter) and can be downloaded at the following link:

https://datasets.rwu.de/3drobust_rwu3d

(Access credentials can be requested from tobias.mueller@rwu.de.)
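How the half- and quarter-resolution versions were produced, and whether their disparity values were rescaled, is not stated here. Assuming plain subsampling by a factor of 2 (half) or 4 (quarter), depth values in metres stay unchanged, while disparities and the focal length, both measured in pixels, shrink by the same factor. The helper names below are hypothetical.

```python
def downsample(grid, factor):
    """Keep every `factor`-th row and column (nearest-neighbour subsampling).

    Averaging neighbouring depths is avoided on purpose: at object edges it
    would blend foreground and background into depths that exist nowhere in
    the scene, an artificial version of the mixed-pixel problem.
    """
    return [row[::factor] for row in grid[::factor]]


def downsample_disparity(disp_px, factor):
    """Disparity is measured in pixels, so its values shrink by the same factor."""
    return [[d / factor for d in row] for row in downsample(disp_px, factor)]


def scale_focal(focal_px, factor):
    """The focal length in pixels scales like the image width."""
    return focal_px / factor


if __name__ == "__main__":
    depth = [[2.0, 2.0, 8.0, 8.0]] * 4  # depth values are kept as-is
    disp = [[42.0, 42.0, 10.5, 10.5]] * 4
    print(downsample(depth, 2))            # half resolution
    print(downsample_disparity(disp, 2))   # half resolution, rescaled
    print(scale_focal(700.0, 4))           # quarter-resolution focal length
```

With both quantities rescaled consistently, f·B/d yields the same depth at every resolution, so a depth computed from low-resolution disparity can be compared directly with the full-resolution ground truth.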

The raw data before interpolation can be obtained by contacting any of the project members listed on the adjacent tab.

Project partners

ifm Unternehmensgruppe

Project Team

Project Lead

Project Team